Abdelhakim Benechehab

Ph.D. student @ Huawei Noah's Ark Lab and EURECOM, a Sorbonne University graduate school.

📫 Paris, France 🇫🇷

Hey, thanks for stopping by! 👋

I am Abdelhakim Benechehab, a third-year Ph.D. student at Huawei Noah’s Ark Lab and EURECOM working on Reinforcement Learning and Foundation Models. I am jointly supervised by Giuseppe Paolo and Maurizio Filippone.

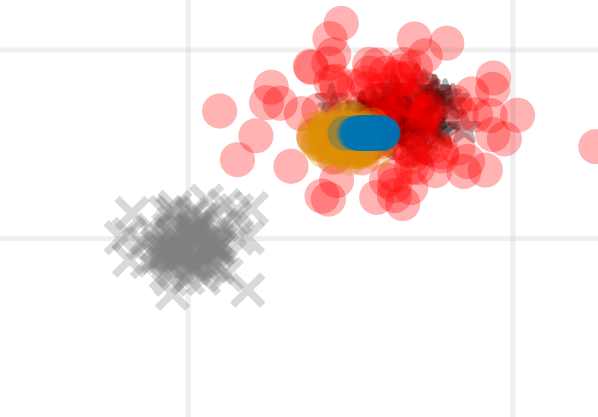

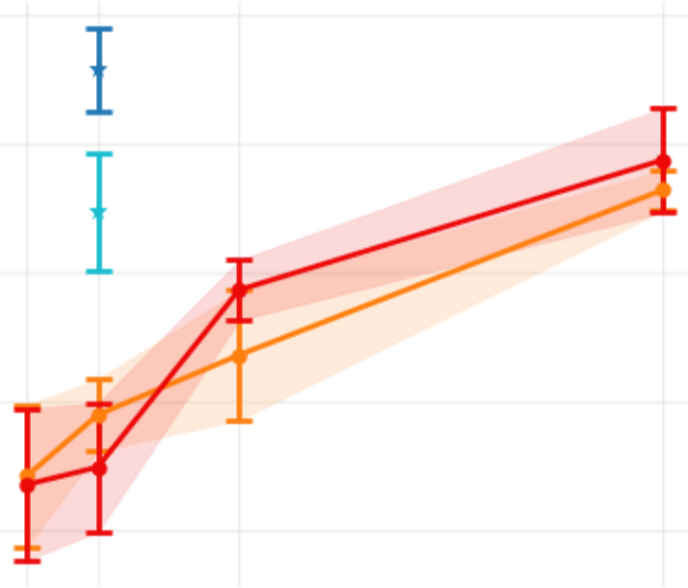

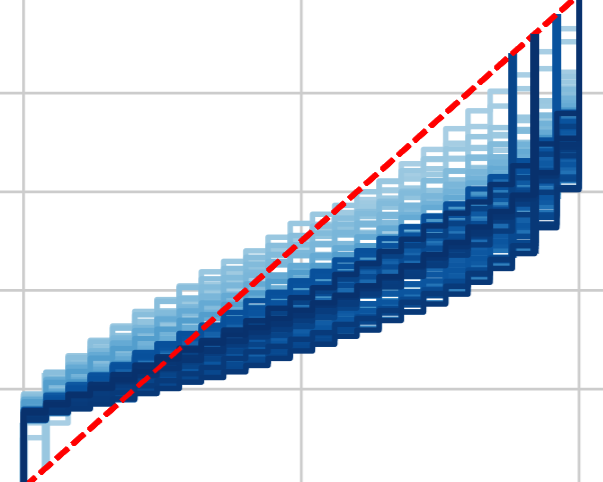

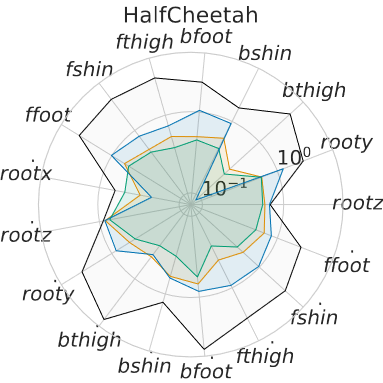

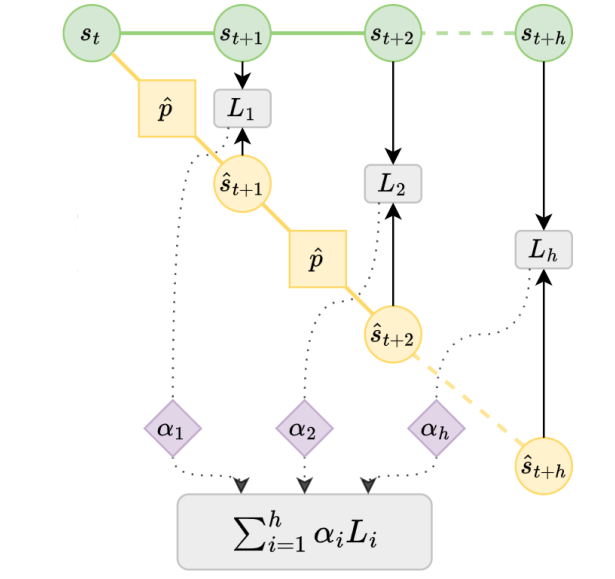

In my research, I explore methods to improve dynamics models in the context of model-based reinforcement learning. This includes developing models that are suitable for long-horizon planning and that are aware of their own errors through uncertainty estimation. Additionally, I am interested in foundation models, particularly their applications in reinforcement learning, dynamics modeling, and time series forecasting.

Previously, I earned my master’s degree in 2021 from the Mathematics, Vision, and Machine Learning (MVA) program at ENS Paris-Saclay. I also hold an engineering degree in mathematics and computer science from École des Mines de Saint-Étienne.

Besides my Ph.D. work, I was a member of the Moroccan NGO Math&Maroc, which aims to promote science and mathematics in my native country, Morocco 🇲🇦. As part of this endeavor, I organized and mentored at the ThinkAI Hackathon and used to host a bi-weekly podcast discussing the latest AI news in Moroccan dialect.

In my spare time, I enjoy sports (primarily volleyball and bouldering), traveling (30+ countries and counting), and learning new things (currently Italian 🇮🇹).

news

| Date | News |
|---|---|
| Nov 24, 2025 | 🤗 Attending the NeurIPS@Paris event @ Sorbonne University. |
| Oct 10, 2025 | 📑 New preprint and code: “From Data to Rewards: a Bilevel Optimization Perspective on Maximum Likelihood Estimation”. |
| Sep 22, 2025 | 🥳 1 workshop paper @ NeurIPS 2025: In-Context Meta-Learning with Large Language Models for Automated Model and Hyperparameter Selection. |
| Jul 12, 2025 | ✈️ Attending ICML in Vancouver 🇨🇦 to present AdaPTS (West Exhibition Hall B2-B3 #W-404, 11:00 AM, July 17th). |
| Jun 18, 2025 | 🎤 Invited Webinar organized by MoroccoAI on Adapting Foundation Models. Video. |